JUPITER

JUPITER is located at the Forschungszentrum Jülich campus in Germany and operated by the Jülich Supercomputing Centre. It is based on Eviden’s BullSequana XH3000 direct liquid cooled architecture. JUPITER is Europe’s first Exascale supercomputer, capable of delivering 1 ExaFLOP of computing power.

| Compute partitions: | Booster Module (highly scalable, GPU-accelerated); Cluster Module (general-purpose, high memory bandwidth) |

| Central Processing Unit (CPU): | The Cluster Module utilises the SiPearl Rhea1 processor (ARM, HBM), integrated into the BullSequana XH3000 platform. |

| Graphics Processing Unit (GPU): | The Booster Module utilises NVIDIA technology, integrated into the BullSequana XH3000 platform. |

| Storage capacity: | JUPITER provides a 20-petabyte partition of ultra-fast flash storage. The spinning disk and backup infrastructure capacity will be procured separately and subject to change. |

| Applications: | JUPITER is designed to tackle the most demanding simulations and compute-intensive AI applications in science and industry. Applications include training large neural networks like language models in AI, simulations for developing functional materials, creating digital twins of the human heart or brain for medical purposes, validating quantum computers, and high-resolution simulations of climate that encompass the entire Earth system. |

| TOP500 ranking: | Booster module: #4 globally (November 2025 ranking) |

| Green500 ranking: | Booster module: #14 globally (November 2025 ranking) |

For information about pay per use conditions, please contact the hosting site directly: Jülich Supercomputing Centre (JSC)

LUMI

LUMI is a pre-exascale EuroHPC supercomputer located in Kajaani, Finland. It is a Cray EX supercomputer supplied by Hewlett Packard Enterprise (HPE) and hosted by CSC – IT Center for Science.

More technical information regarding LUMI can be found here.

| Compute partitions: | GPU partition (LUMI-G), x86 CPU-partition (LUMI-C), data analytics partition (LUMI-D), container cloud partition (LUMI-K) |

| Central Processing Unit (CPU): | The LUMI-C partition features 64-core next-generation AMD EPYC™ CPUs |

| Graphics Processing Unit (GPU): | LUMI-G is based on the future generation AMD Instinct™ GPU |

| Storage capacity: | LUMI’s storage system consists of three components: a 7-petabyte partition of ultra-fast flash storage, a more traditional 80-petabyte capacity storage based on the Lustre parallel filesystem, and a 30-petabyte data management service based on Ceph. In total, LUMI has 117 petabytes of storage and a maximum I/O bandwidth of 2 terabytes per second. |

| Applications: | AI, especially deep learning, and traditional large scale simulations combined with massive scale data analytics in solving one research problem |

| TOP500 ranking: | #9 globally (November 2025) |

| Green500 ranking: | #38 globally (November 2025) |

| Other details: | LUMI takes up over 150 m² of space, which is about the size of a tennis court. The system weighs nearly 150 000 kilograms (150 metric tons). |
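The storage total quoted in the table above can be checked with a few lines of arithmetic. The following is only an illustrative sketch: the dictionary keys are hypothetical labels chosen here, and the values are the per-tier capacities as stated in the table.

```python
# Per-tier capacities in petabytes, as quoted in the LUMI storage row.
# The key names are illustrative labels, not official product names.
lumi_storage_pb = {
    "flash": 7,
    "lustre_capacity": 80,
    "ceph_data_management": 30,
}

# Sum the three tiers to get the overall capacity.
total_pb = sum(lumi_storage_pb.values())
print(f"Total storage: {total_pb} PB")
```

The sum agrees with the 117 petabytes stated for the system as a whole.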

For information about pay per use conditions, please contact the hosting site directly: CSC – IT Center for Science

| Compute partitions: | GPU partition (Booster) delivering 240 petaflops; x86 CPU partition (Data-Centric) delivering 9 petaflops and featuring DDR5 memory and local NVMe. |

| Central Processing Unit (CPU): | Intel Ice Lake (Booster), Intel Sapphire Rapids (Data-Centric) |

| Graphics Processing Unit (GPU): | 13824 "Da Vinci" GPUs (based on NVIDIA Ampere architecture) delivering up to 10 exaflops of FP16 Tensor Flow AI performance |

| Storage capacity: | Leonardo is equipped with over 100 petabytes of state-of-the-art hard disk drive storage and 5 petabytes of full-flash and NVMe storage. |

| Applications: | The system targets modular and scalable computing applications, data analysis, as well as interactive, urgent and cloud computing applications. |

| TOP500 ranking: | #10 globally (November 2025) |

| Green500 ranking: | #78 globally (November 2025) |

| Other details: | Leonardo is hosted on the premises of the Tecnopolo di Bologna. The area devoted to the EuroHPC Leonardo system includes 1240 sqm of computing room floor space and 900 sqm of ancillary space. |

For information about pay per use conditions, please contact the hosting site directly: CINECA

MARENOSTRUM 5

MareNostrum 5 is a pre-exascale EuroHPC supercomputer located in Barcelona, Spain. The system is supplied by Bull SAS combining Bull Sequana XH3000 and Lenovo ThinkSystem architectures. MareNostrum 5 is hosted by Barcelona Supercomputing Center (BSC).

More technical information regarding MareNostrum 5 can be found here.

| Compute partitions: | GPP (General Purpose partition), ACC (Accelerated partition), NGT GPP (Next Generation Technology General Purpose partition) and NGT ACC (Next Generation Technology Accelerated partition). Additional smaller partitions for pre- and post-processing. |

| Central Processing Unit (CPU): | The GPP and ACC partitions both rely on Intel Sapphire Rapids CPUs. NGT ACC is based on NVIDIA GB200 and the NGT GPP is based on NVIDIA Grace. |

| Graphics Processing Unit (GPU): | The ACC partition is based on NVIDIA Hopper whereas the NGT ACC partition is built on NVIDIA GB200. |

| Storage capacity: | MareNostrum storage provides 248PB net capacity based on SSD/Flash and hard disks, and an aggregated performance of 1.2TB/s on writes and 1.6TB/s on reads. Long-term archive storage solution based on tapes will provide 402PB additional capacity. Spectrum Scale and Archive are used as parallel filesystem and tiering solution respectively. |

| Applications: | Thanks to its heterogeneous configuration, MareNostrum 5 is well suited to all kinds of applications, with a special focus on medical applications, drug discovery, digital twins (earth and human body), energy, etc. Its large general-purpose partition provides an environment well suited for most current applications that solve scientific/industrial problems. In addition, the accelerated partition provides an excellent environment for large scale simulations, AI and deep learning. |

| TOP500 ranking: | ACC: #14 globally (November 2025); GPP: #50 globally (November 2025) |

| Green500 ranking: | #48 globally (November 2025) |

| Other details: | MareNostrum 5 is located in BSC’s new facilities, next to the Chapel which is hosting previous systems. The datacenter has a total power capacity of 20MW, and cooling capacity of 17MW, with a PUE below 1.08. |

For information about pay per use conditions, please contact the hosting site directly: Barcelona Supercomputing Center (BSC)

MELUXINA

MeluXina is a petascale EuroHPC supercomputer located in Bissen, Luxembourg. It is supplied by Atos, based on the BullSequana XH2000 supercomputer platform and hosted by LuxProvide.

More technical information regarding MeluXina can be found here.

| Compute partitions: | Accelerator - GPU (500 AI PetaFlops), Cluster (3 PetaFlops peak), Accelerator - FPGA and Large Memory Modules |

| Central Processing Unit (CPU): | AMD EPYC |

| Graphics Processing Unit (GPU): | NVIDIA Ampere A100 |

| Storage capacity: | 20 PetaBytes main storage with all-flash scratch tier over 600GB/s, Tape archival capabilities |

| Applications: | AI, Digital Twins, Traditional Computational workloads, Quantum simulation |

| TOP500 ranking: | #157 globally (November 2025) |

| Green500 ranking: | #87 globally (November 2025) |

| Other details: | Modular Supercomputer Architecture, Cloud Module for complex use cases and persistent services, Infiniband HDR interconnect, high speed links to the RESTENA NREN and GÉANT network, Luxembourg Internet Exchange and Public Internet |

For information about pay per use conditions, please contact the hosting site directly: LuxProvide

KAROLINA

Karolina is a petascale EuroHPC supercomputer located in Ostrava, Czechia. It is supplied by Hewlett Packard Enterprise (HPE) and based on HPE Apollo 2000 Gen10 Plus and HPE Apollo 6500 systems. Karolina is hosted by IT4Innovations National Supercomputing Center.

More technical information regarding Karolina can be found here.

| Compute partitions: | The supercomputer consists of 6 main parts. |

| Central Processing Unit (CPU): | More than 100,000 CPU cores and 250 TB of RAM |

| Graphics Processing Unit (GPU): | More than 3.8 million CUDA cores / 240,000 tensor cores of NVIDIA A100 Tensor Core GPU accelerators with a total of 22.4 TB of superfast HBM2 memory |

| Storage capacity: | More than 1 petabyte of user data with high-speed data storage with a speed of 1 TB/s |

| Applications: | Traditional Computational, AI, Big Data |

| TOP500 ranking: | GPU: #224 globally (November 2025); CPU: #450 globally (November 2025) |

| Green500 ranking: | GPU: #84 globally (November 2025); CPU: #153 globally (November 2025) |

For information about pay per use conditions, please contact the hosting site directly: IT4Innovations National Supercomputing Center

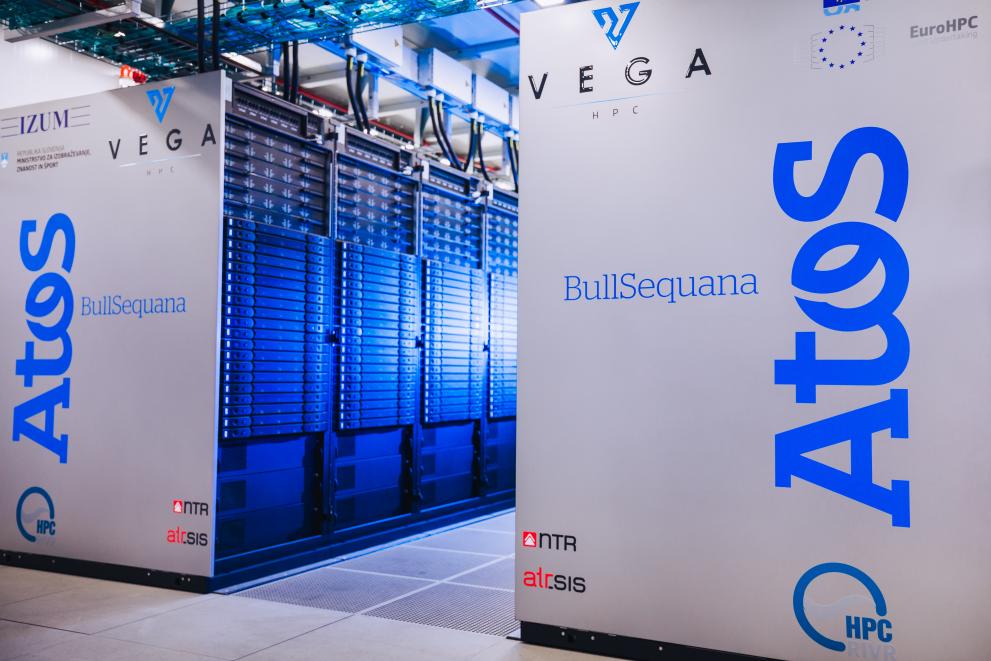

| Compute partitions: | CPU partition: 960 nodes with 2 CPUs and 256 GB memory per node (20% with 1 TB per node), 1x HDR100; GPU partition: 60 nodes with 2 CPUs and 512 GB memory, 2x HDR100, 4x Nvidia A100 per node |

| Central Processing Unit (CPU): | 2040x AMD EPYC 7H12 CPUs (64c, 2.6-3.3GHz), 130,560 cores across the CPU and GPU partitions |

| Graphics Processing Unit (GPU): | 240x Nvidia A100 with 40 GB HBM2 (+4 on GPU login nodes), 6912 FP32 CUDA cores and 432 Tensor cores per GPU |

| Storage capacity: | High-performance NVMe Lustre (1PB), large-capacity Ceph (23PB) |

| Applications: | Traditional Computational, AI, Big Data/HPDA, Large-scale data processing |

| TOP500 ranking: | CPU: #335 globally (November 2025); GPU: #410 globally (November 2025) |

| Green500 ranking: | GPU: #274 globally (November 2025); CPU: #275 globally (November 2025) |

| Other details: | 6x 100 Gbit/s bandwidth for data transfers to other national and international computing centres, data processing throughput of more than 400GB/s with high-performance storage and 200GB/s with large-capacity storage |
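The CPU, core and GPU totals in the table above follow from the per-node figures. As a rough consistency check, and assuming every node in both partitions carries two 64-core CPUs as the table states, the arithmetic can be sketched as follows (all variable names are illustrative):

```python
# Per-partition figures as quoted in the table above.
cpu_partition_nodes = 960
gpu_partition_nodes = 60
cpus_per_node = 2          # both partitions use 2 CPUs per node
cores_per_cpu = 64         # AMD EPYC 7H12, 64 cores
gpus_per_gpu_node = 4      # 4x Nvidia A100 per GPU node

# Totals across both partitions (login nodes are not counted).
total_cpus = (cpu_partition_nodes + gpu_partition_nodes) * cpus_per_node
total_cores = total_cpus * cores_per_cpu
total_gpus = gpu_partition_nodes * gpus_per_gpu_node

print(f"CPUs: {total_cpus}, cores: {total_cores}, GPUs: {total_gpus}")
```

These values match the 2040 CPUs, the core count and the 240 GPUs listed in the table; the four extra GPUs on the GPU login nodes are left out of the count, as in the table.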

For information about pay per use conditions, please contact the hosting site directly: IZUM

DISCOVERER

Discoverer is a petascale EuroHPC supercomputer located in Sofia, Bulgaria. It is supplied by Atos, based on the BullSequana XH2000 supercomputer and hosted by Sofia Tech Park.

More technical information regarding Discoverer can be found here.

| Compute partitions: | One partition providing 1128 nodes, 4.44 petaflops |

| Central Processing Unit (CPU): | AMD EPYC 7H12 64core, 2.6GHz, 280W (Code name Rome) |

| Graphics Processing Unit (GPU): | 4x NVIDIA DGX H200 system with 32x NVIDIA H200 T GPUs, with 141GB of GPU memory each |

| Storage capacity: | DDN ES7990X ExaScaler (2 PB) and Cray ClusterStor E1000 (5 PB) (both Lustre storages), and Weka AI-optimized storage with direct GPU access (442 TB) |

| Applications: | In-silico drug discovery, structure-property predictions, and molecular discovery enhance material design and drug development. Climate forecasting, environmental modeling, and digital product formulation support decision-making. SLM, machine learning, and neural network training improve automation and build data-driven solutions. |

| TOP500 ranking: | #289 globally (November 2025) |

| Green500 ranking: | #230 globally (November 2025) |

| Other details: | DISCOVERER is situated in Sofia Tech Park, a leading innovation hub that fosters collaboration between research institutions, startups, and technology companies. The park provides state-of-the-art laboratories, co-working spaces, and business incubators to support the development of cutting-edge technologies. |

For information about pay per use conditions, please contact the hosting site directly: DISCOVERER

| Compute partitions: | ARM partition: 1632 nodes, 3.96 PFLOPS; x86 partition: 500 nodes, 1.86 PFLOPS; Accelerated partition: 33 nodes, 1.55 PFLOPS |

| Central Processing Unit (CPU): | A64FX (ARM partition), AMD EPYC (x86 partitions) |

| Graphics Processing Unit (GPU): | 33 nodes, each with 4x Nvidia Ampere A100 40 GB or 80 GB |

| Storage capacity: | 430 TB high-speed NVMe partition, 10.6 PB high-speed parallel file system partition |

| Applications: | Traditional Computational, AI, Big Data |

| TOP500 ranking: | #323 globally (November 2025) |

| Green500 ranking: | #125 globally (November 2025) |

For information about pay per use conditions, please contact the hosting site directly: Deucalion

| Compute partitions: | CPU partition; Accelerated partition |

| Central Processing Unit (CPU): | Virtual partition utilising 64 of 72 ARM cores of each GH200 superchip |

| Graphics Processing Unit (GPU): | Virtual partition utilising 8 of 72 ARM cores and the H100 of each GH200 superchip |

| Storage capacity: | 1 PB High-performance NVMe storage and 10 PB usable capacity storage |

| Applications: | Traditional HPC, AI, Big Data/HPDA. |

ARRHENIUS

Arrhenius is currently being installed at the Linköping University campus in Sweden and operated by the National Academic Infrastructure for Supercomputing in Sweden. It is an HPE Cray EX-based system, primarily direct liquid cooled. It will soon be capable of delivering over 60 PetaFLOP of computing power.

| Compute partitions: | HPC CPU partition; HPC GPU partition; Sensitive data partition; Persistent compute partition |

| Central Processing Unit (CPU): | The CPU partition utilises 424 AMD Turin 128-core CPUs. |

| Graphics Processing Unit (GPU): | The GPU partition utilises 1528 Nvidia Grace Hopper Superchips |

| Storage capacity: | Arrhenius provides a 29-petabyte parallel file system and separate storage systems for sensitive data and persistent compute. |

| Applications: | Arrhenius is designed to tackle demanding simulations and compute-intensive AI applications in science and industry. Applications include training neural network models in AI, personalised medicine, simulations for developing new materials, simulations in life science, simulating fluid dynamics and high-resolution simulations of climate. |

ALICE RECOQUE

Alice Recoque will be located at CEA’s supercomputing centre TGCC (Très Grand Centre de calcul du CEA) in Bruyères-le-Châtel, France, and operated by the Jules Verne consortium, led by France through GENCI and the Commissariat à l’énergie atomique et aux énergies alternatives (CEA), with the participation of the Netherlands through SURF and Greece through GRNet. Its architecture will be based on the new Eviden Sequana XH3500 platform, integrating a unified compute partition with AMD Venice 256-core processors and next-generation AMD MI430X GPUs. It will be capable of delivering 1 ExaFLOP of computing power.

| Compute partitions: | Unified partition with memory-coherent accelerated nodes allowing multi-tenant execution of scalar and accelerated HPC/AI workloads; Scalar partition based on general-purpose sovereign CPUs |

| Central Processing Unit (CPU): | The Scalar partition is likely to use SiPearl next-generation ARM-based processors; each scalar node will be federated by a BULL BXIv3 link. Final composition and details are to be announced in mid-2026. The Unified partition will allow part of the host processor, an AMD EPYC™ Venice with 256 cores and 1 TB of MRDIMM, to be used in a multi-tenant way for scalar workloads. |

| Graphics Processing Unit (GPU): | The Unified partition will provide access to accelerated nodes, each with one AMD Venice 256-core host processor and 4 AMD Instinct™ MI430X GPUs with 432 GB of HBM4 memory. Each node will be federated by nine BULL BXIv3 400 Gb/s links: one link for the CPU and 2 links per GPU. |

| Storage capacity: | To be announced later. |

| Applications: | As an Exascale system and a leadership AI supercomputer for "AI Factory France", Alice Recoque will address the convergence of HPC, AI and quantum computing. It will enhance climate modeling, accelerate innovation in materials and energy, enable digital twins for personalized medicine, and support next-gen European foundational and frontier AI models. It will also address the vast amount of data generated by scientific instruments such as telescopes and satellites, as well as IoT devices and AI applications, driving breakthroughs across multiple domains. It will also provide access to quantum accelerators to assess the potential of hybrid HPC/quantum computing for specific workloads in quantum chemistry, optimisation or machine learning. |
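The per-node link count given in the GPU row above can be reproduced from its parts. The sketch below assumes the quoted link speed means 400 gigabits per second per link; the aggregate bandwidth it prints is a nominal figure derived here for illustration, not a number stated by the source, and it ignores protocol overhead. Variable names are illustrative.

```python
# Figures quoted for one accelerated node of the Unified partition.
gpus_per_node = 4
links_for_cpu = 1
links_per_gpu = 2
link_speed_gbit_s = 400    # assumption: 400 Gbit/s per BXIv3 link

# One link for the host CPU plus two links for each GPU.
links_per_node = links_for_cpu + links_per_gpu * gpus_per_node

# Nominal aggregate injection bandwidth (derived, not from the source).
aggregate_gbit_s = links_per_node * link_speed_gbit_s
aggregate_gbyte_s = aggregate_gbit_s / 8

print(f"Links per node: {links_per_node}")
print(f"Nominal aggregate: {aggregate_gbit_s} Gbit/s ({aggregate_gbyte_s:.0f} GB/s)")
```

The link count comes out at nine, consistent with the table.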

*Expected performance

All systems display the real performance of the combined partitions and are ordered according to the latest TOP500 ranking.